Programming Project #4 (proj4)

COMPSCI 180 Intro to Computer Vision and Computational Photography

Chuyan Zhou

This webpage uses the Typora Academic theme of markdown files.

Part A

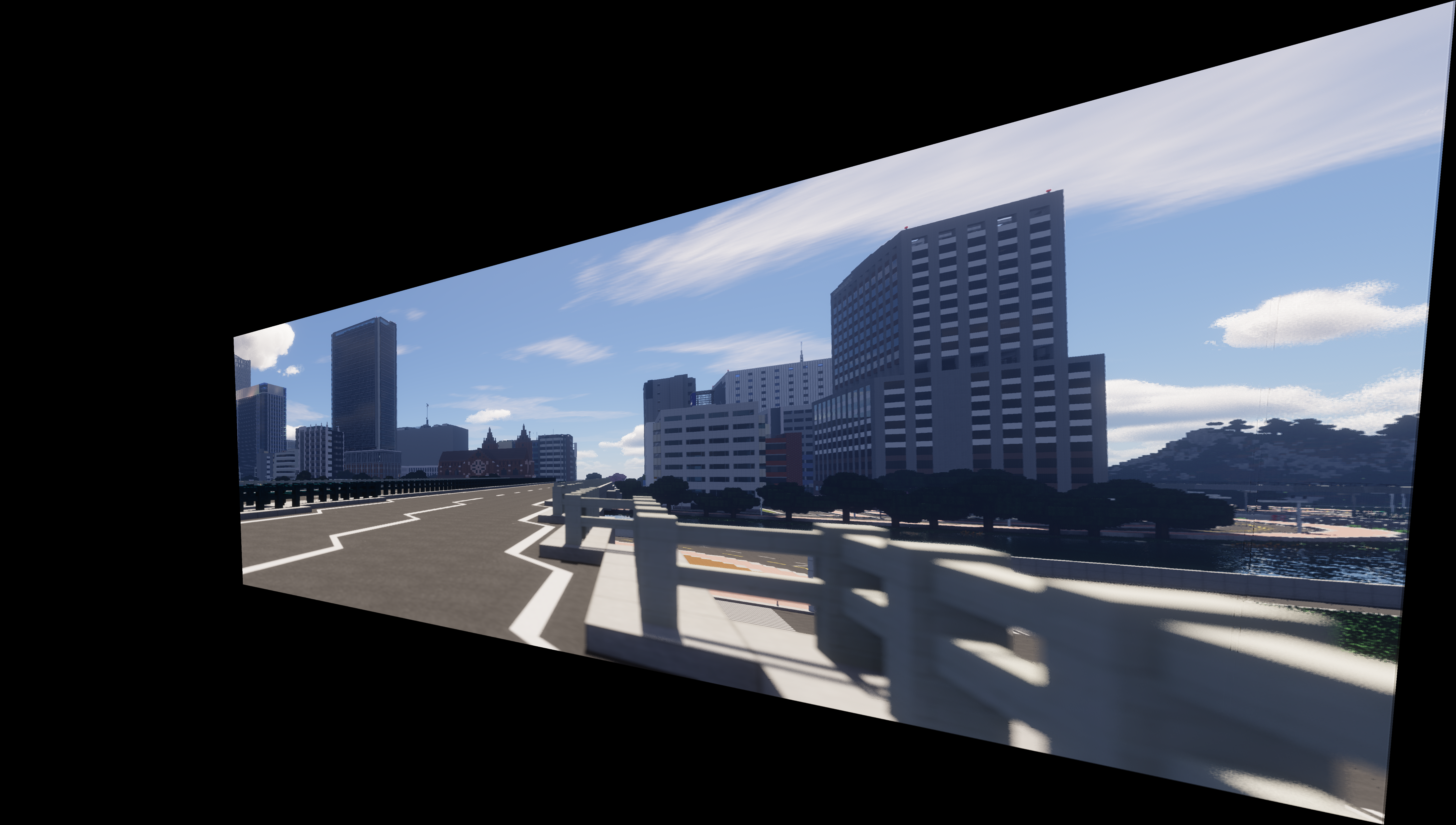

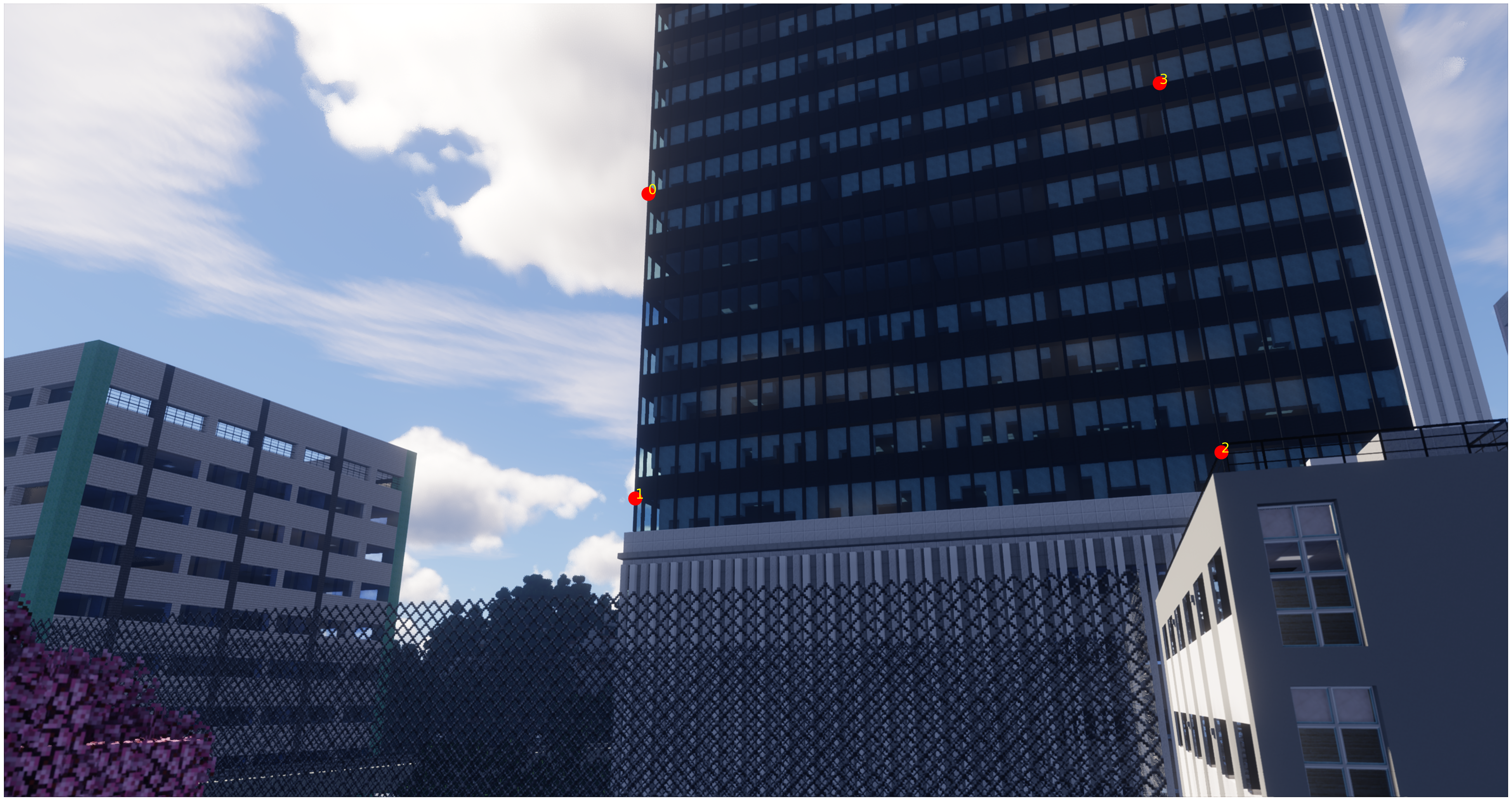

1. Shoot the pictures

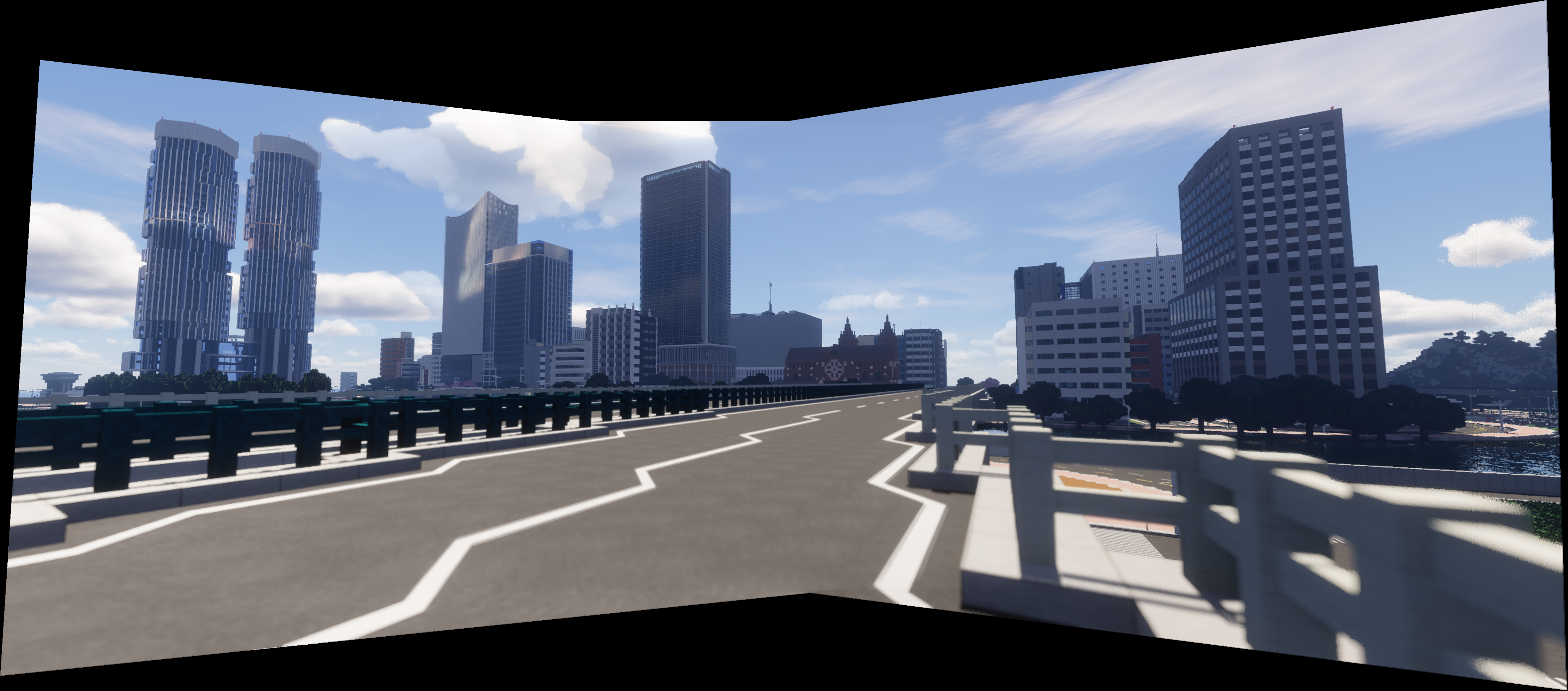

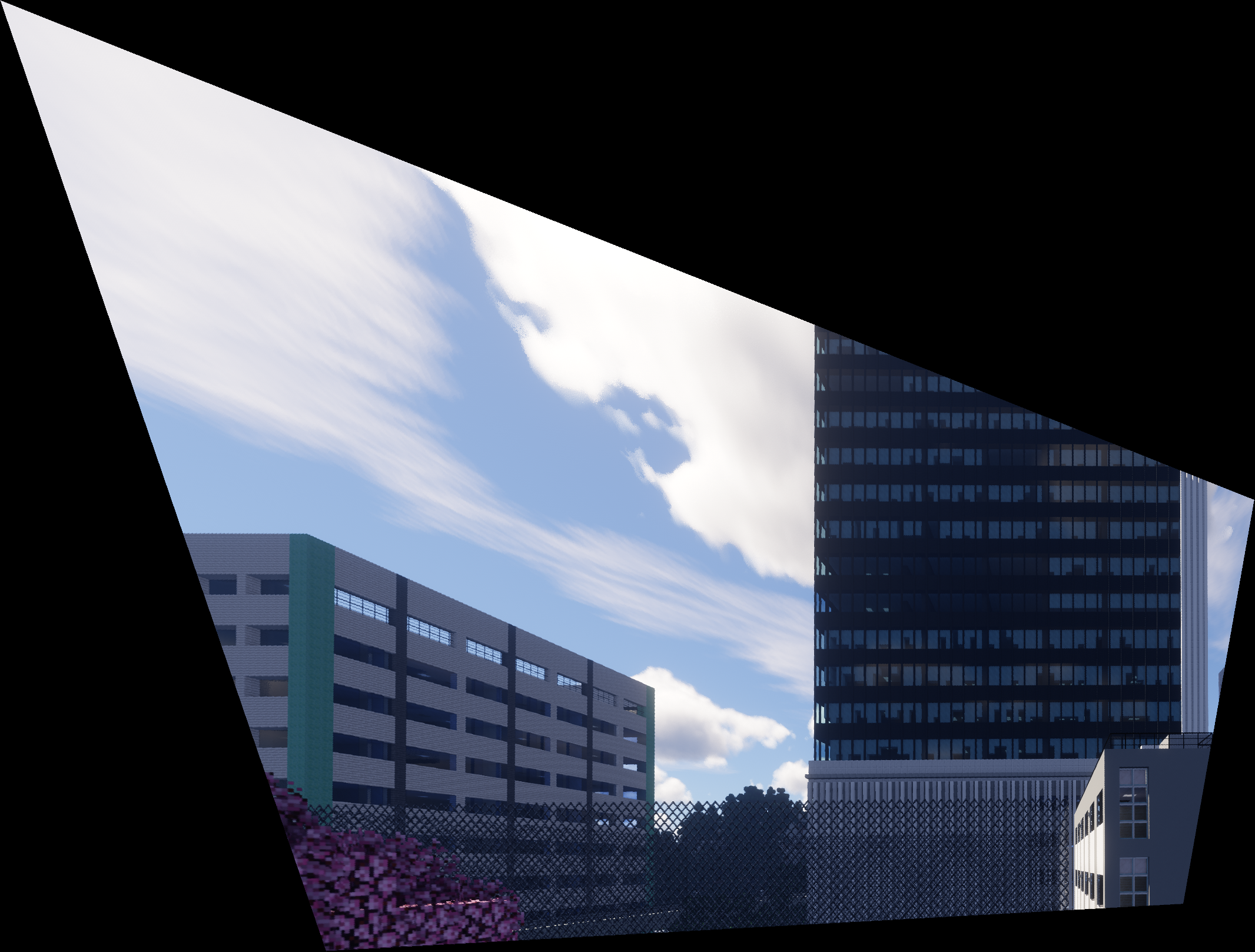

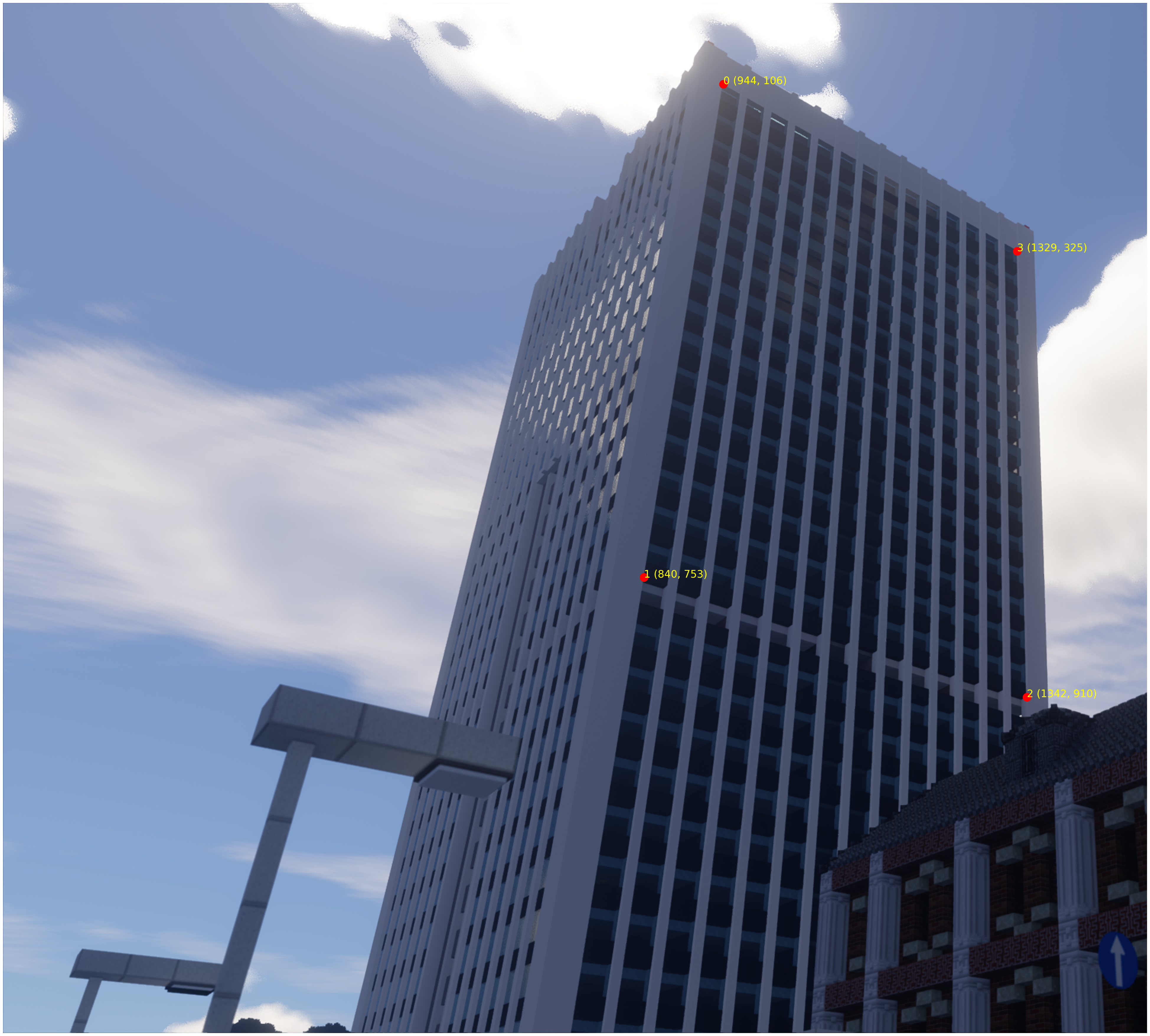

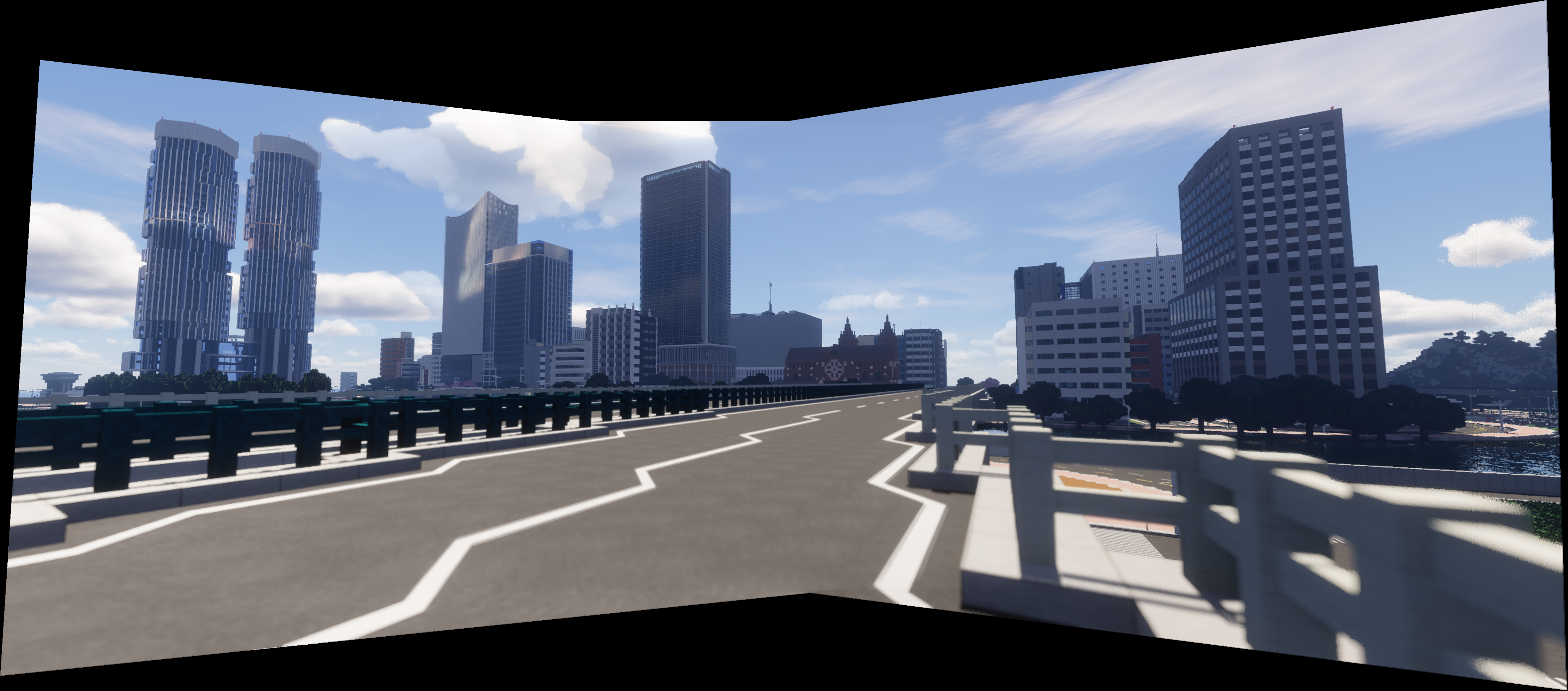

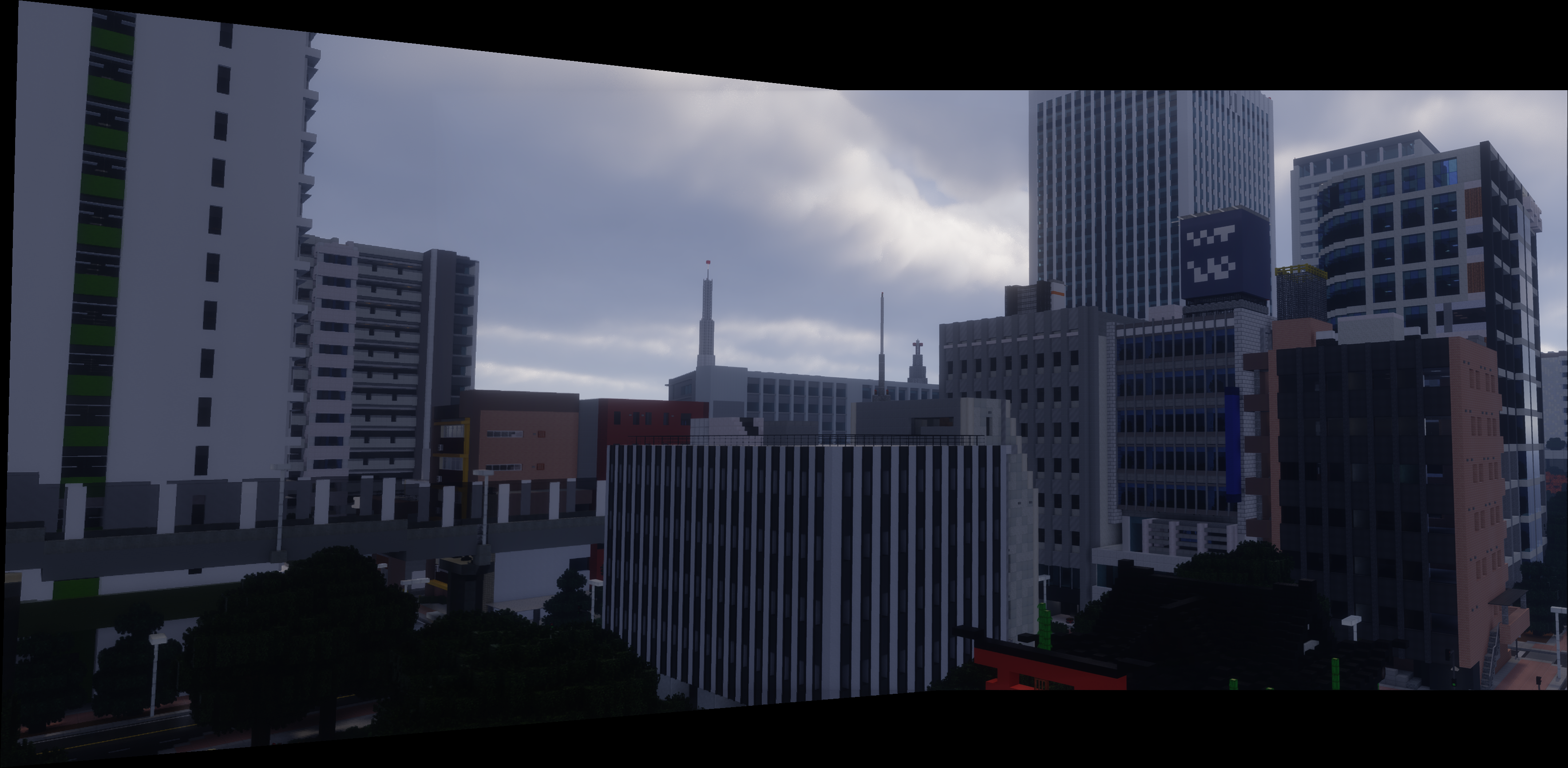

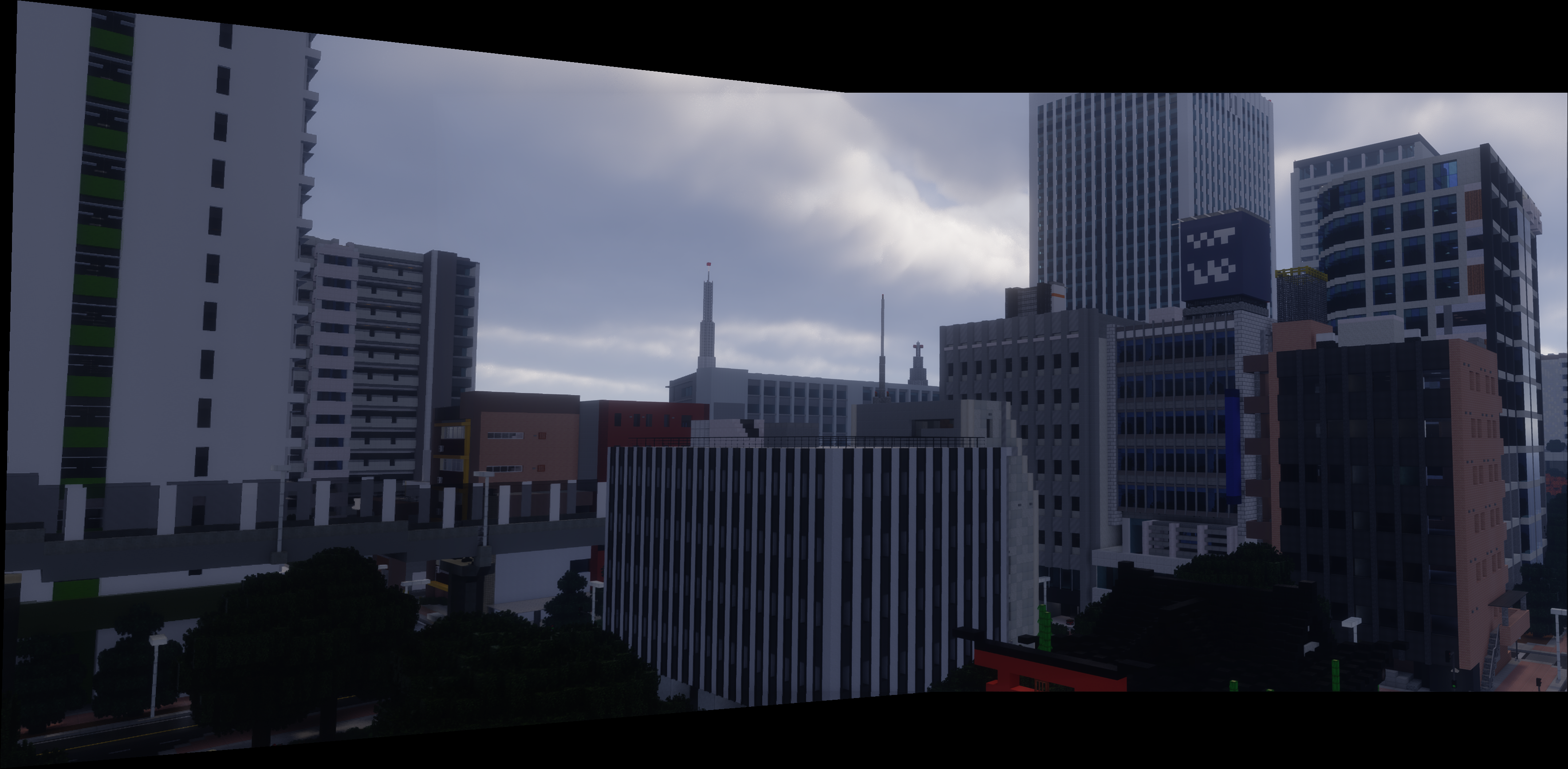

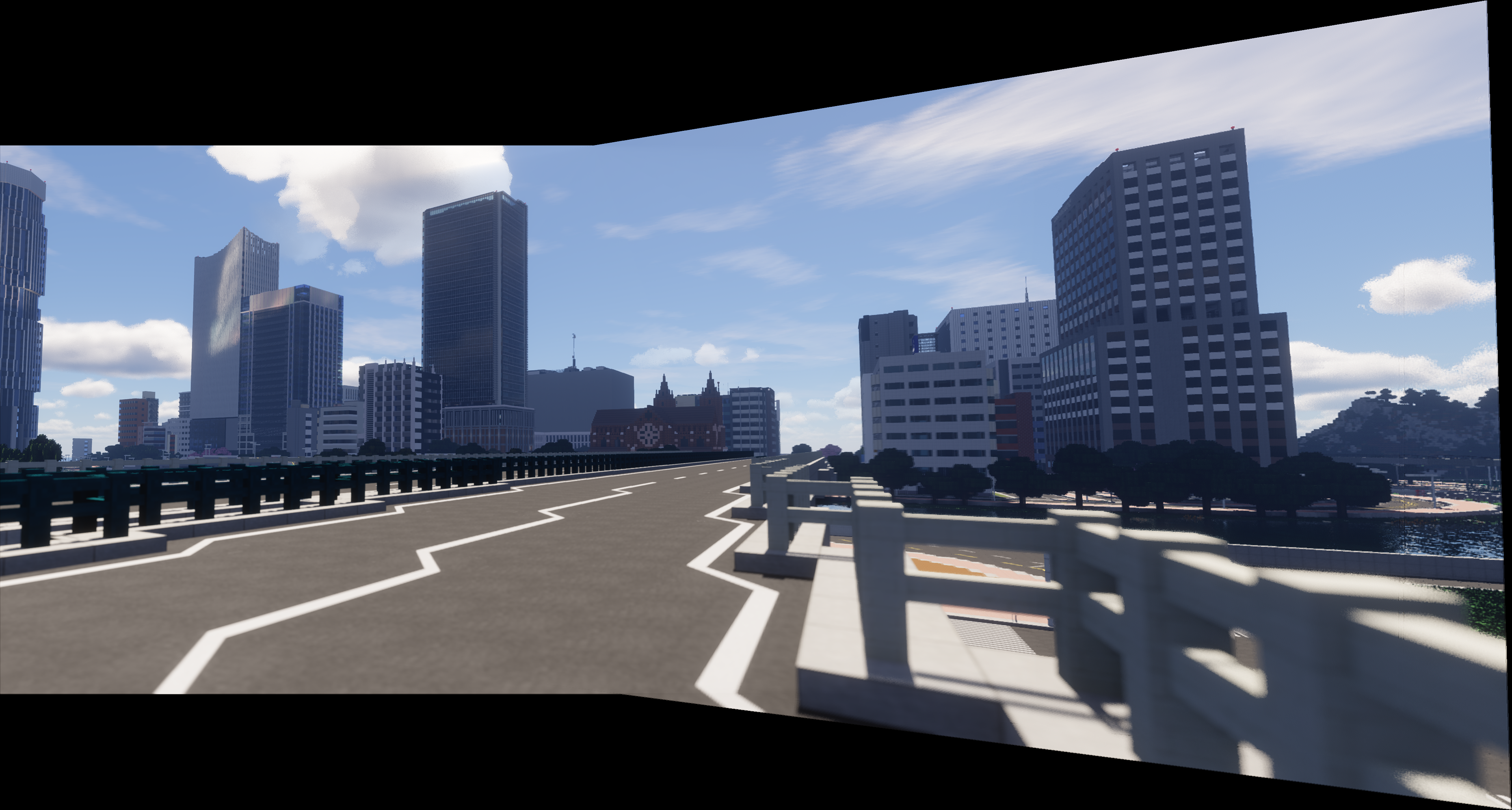

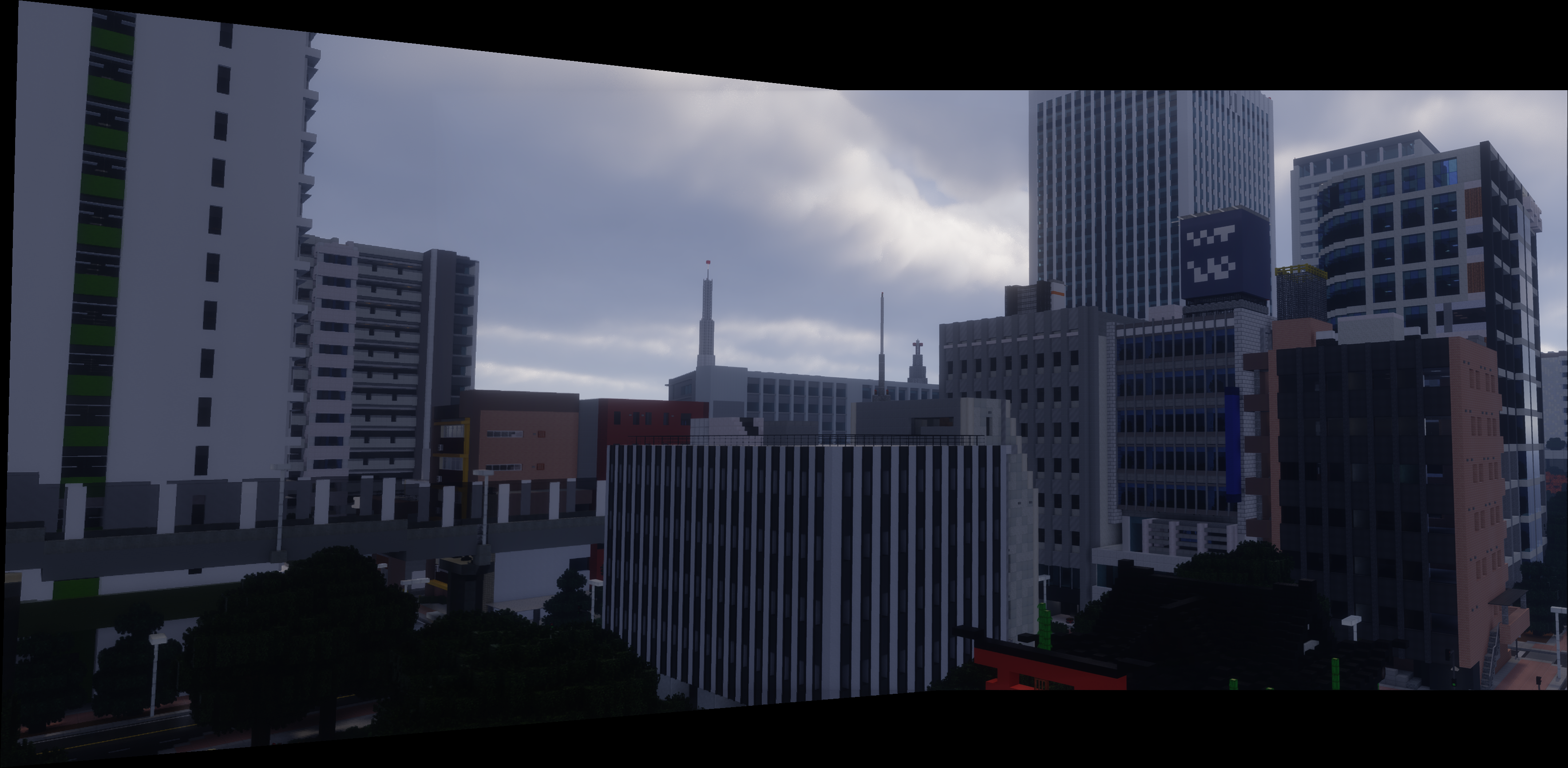

I took two pictures of one place in my Minecraft world. It's visible that the two pictures have different perspectives, and there is a projective relationship between the two pictures, especially embodied on the surface of the main building. The two pictures are shown below:

I think the effort of configuring & taking photos in games with perspective properties may be seen as one kind of B&W in the "own idea" section.

2. Recover Homographies

Given there is a projective relationship between these two images, we know that we can use a homography matrix

Given a point

where

having 8 degrees of freedom and

and the transformation equation can be expanded as

which can be collapsed into

where

3. Warp the images

After we have the homography matrix

For each pixel in the target image, we can use the homography matrix to find its corresponding pixel in the source image.

We can use the bilinear interpolation to find the values for the non-integer pixel positions in the source image.

Because there are negative pixel positions in the source image, we can use a translation matrix

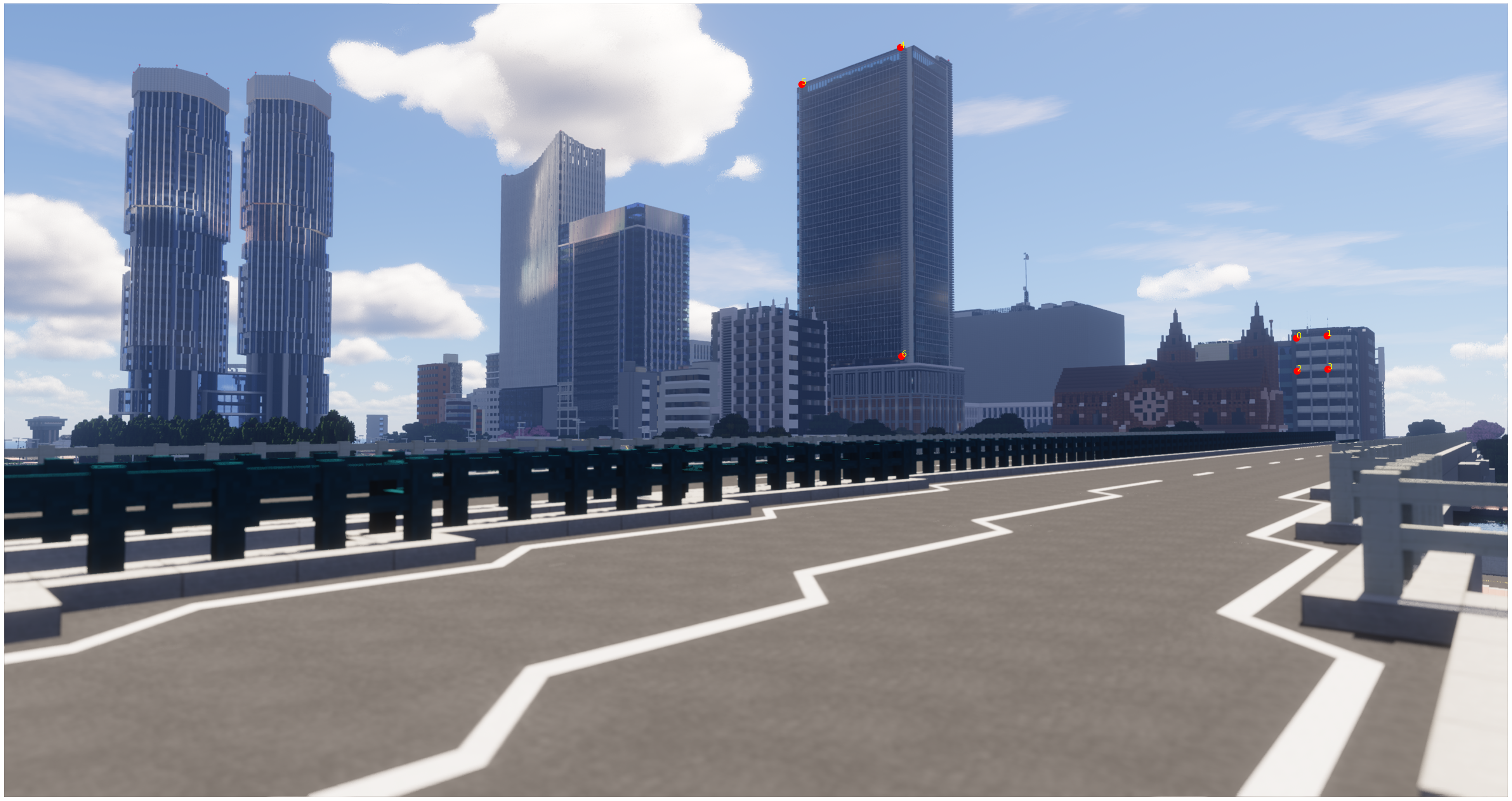

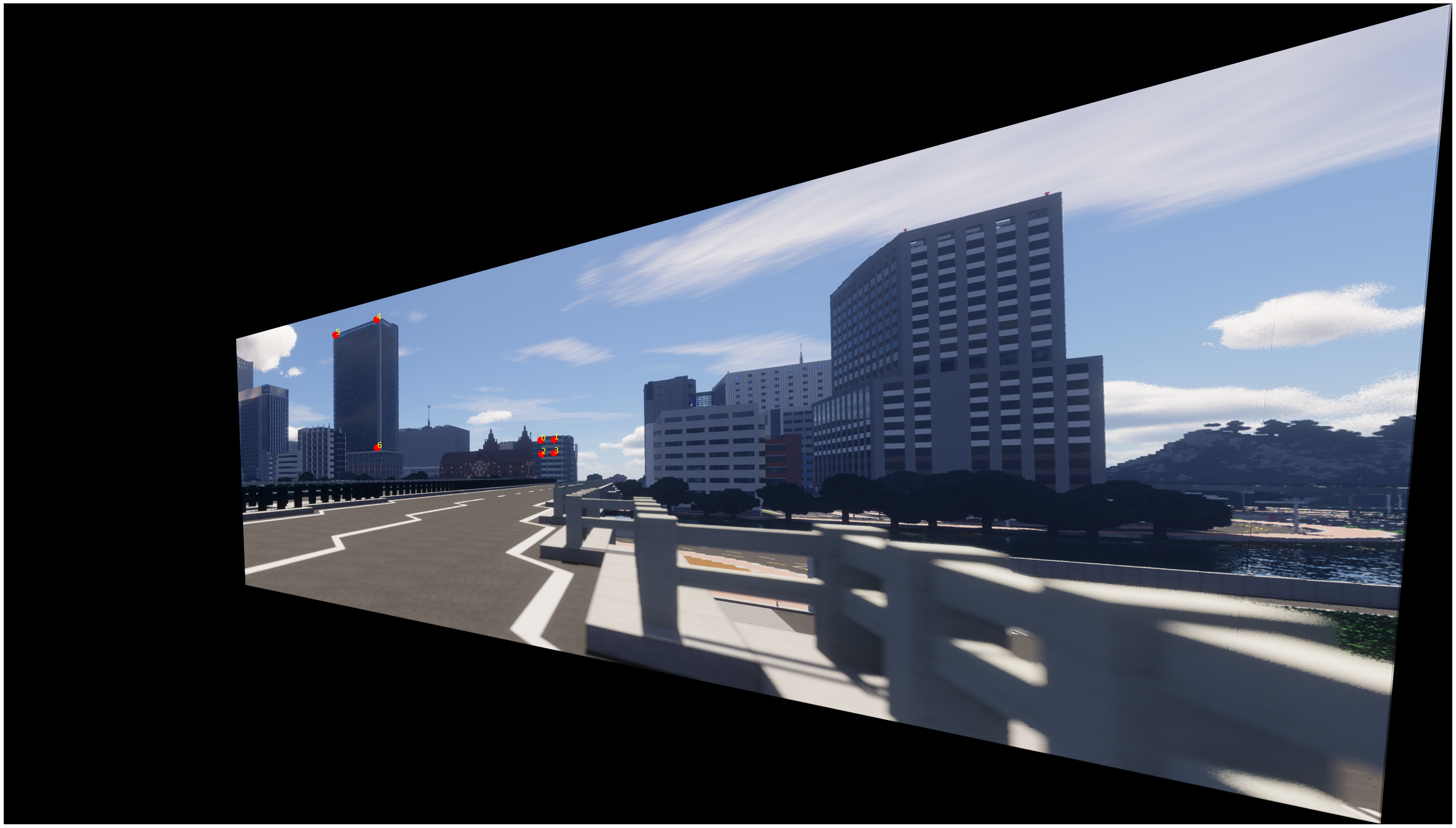

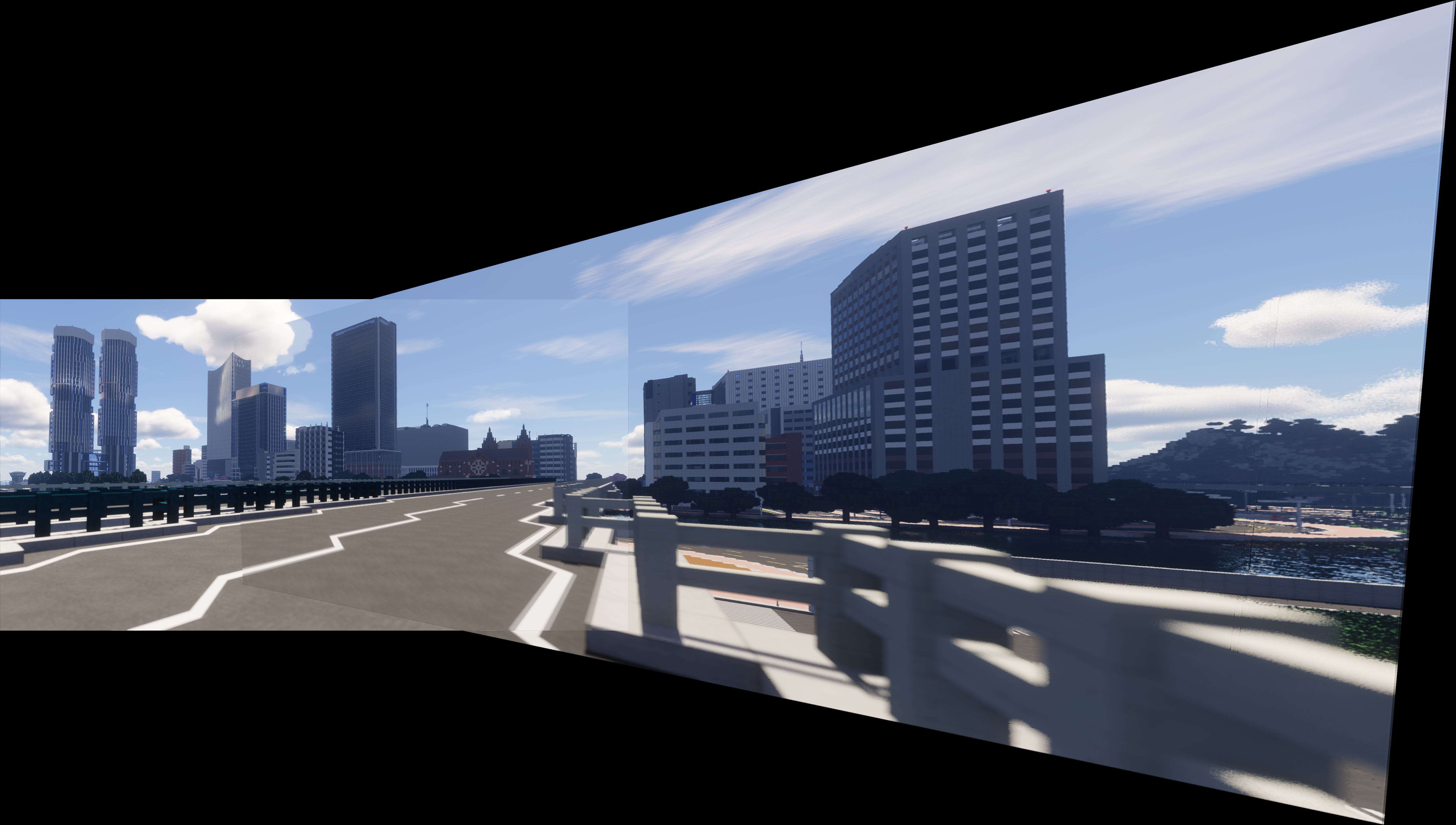

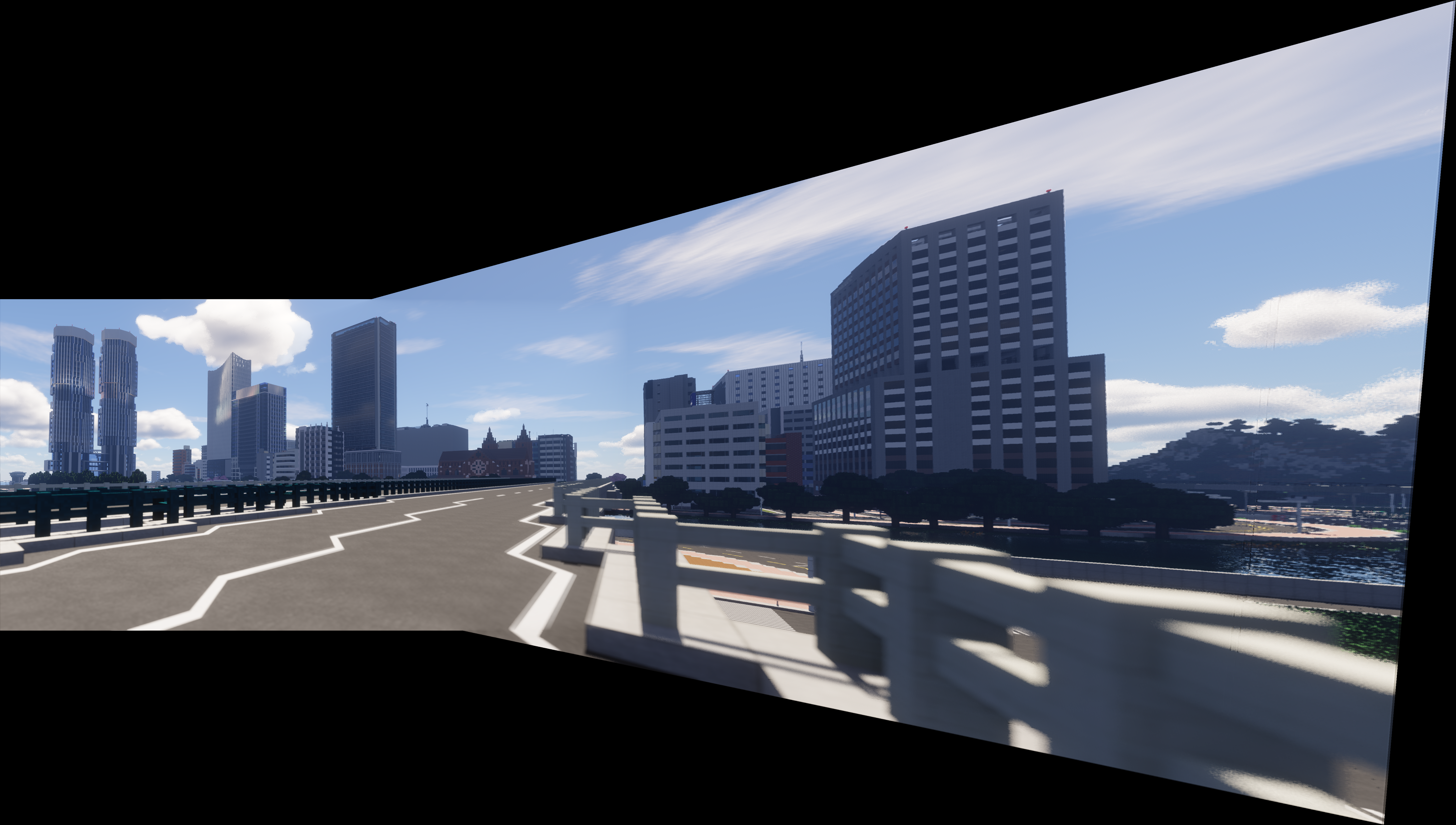

We warp image 1 to 2 now.

Here is the version without the correspondence points:

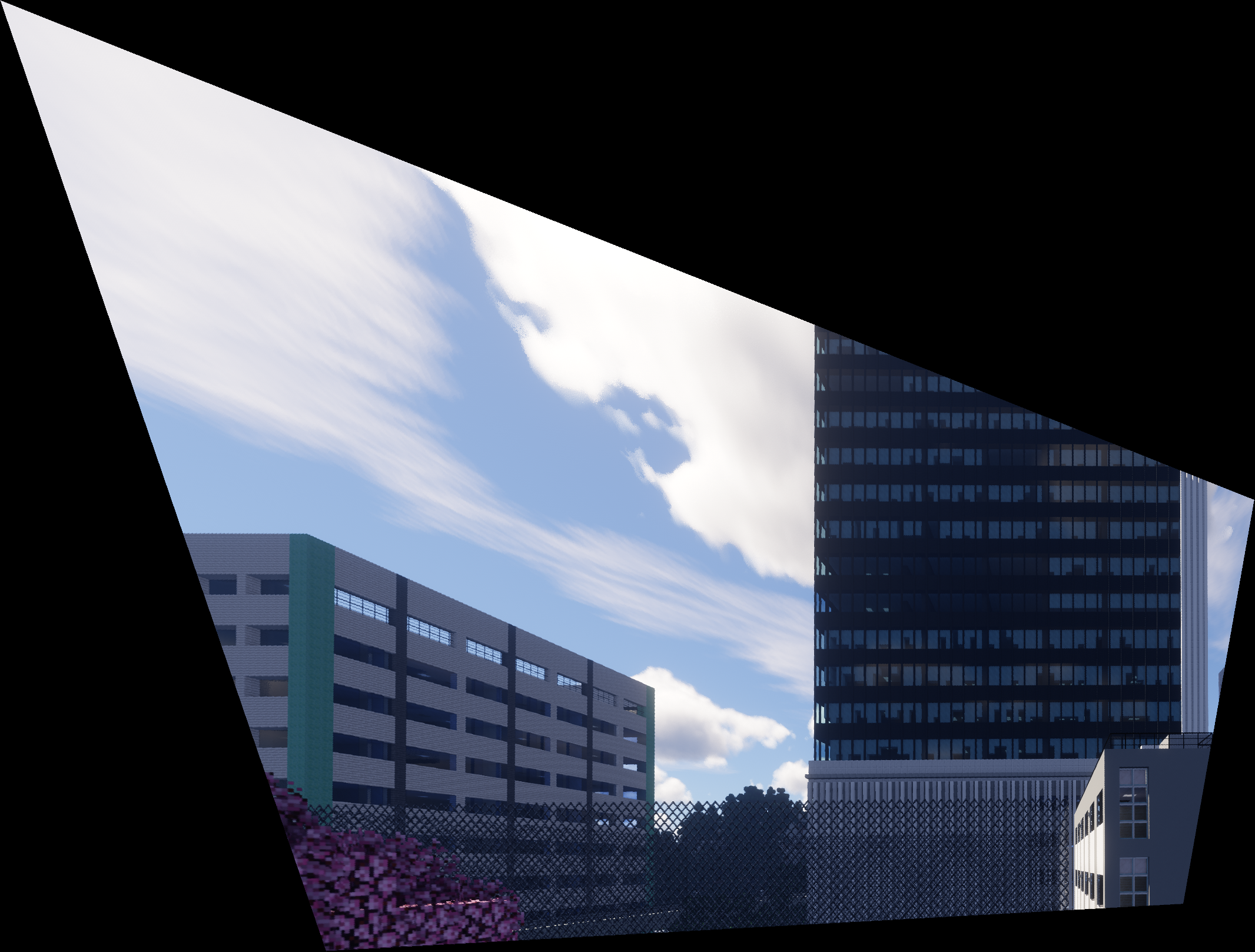

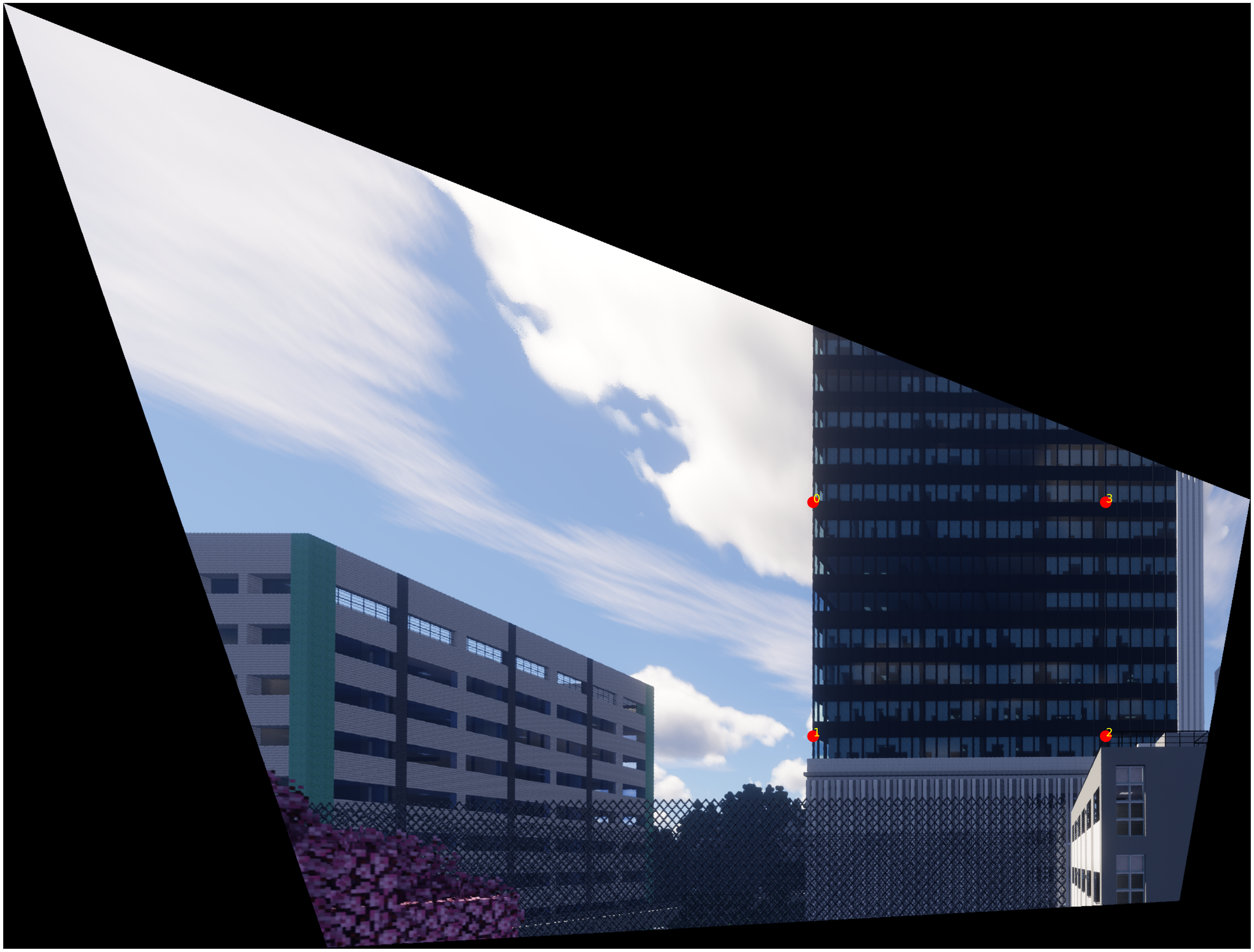

4. Image Rectification

In this part, we choose 4 points from one original image, and warp the image to make the 4 points become a rectangle.

4.1 Rectified Image 1

A large rectified image as above:

4.2 Rectified Image 2

5. Blend the images into a mosaic

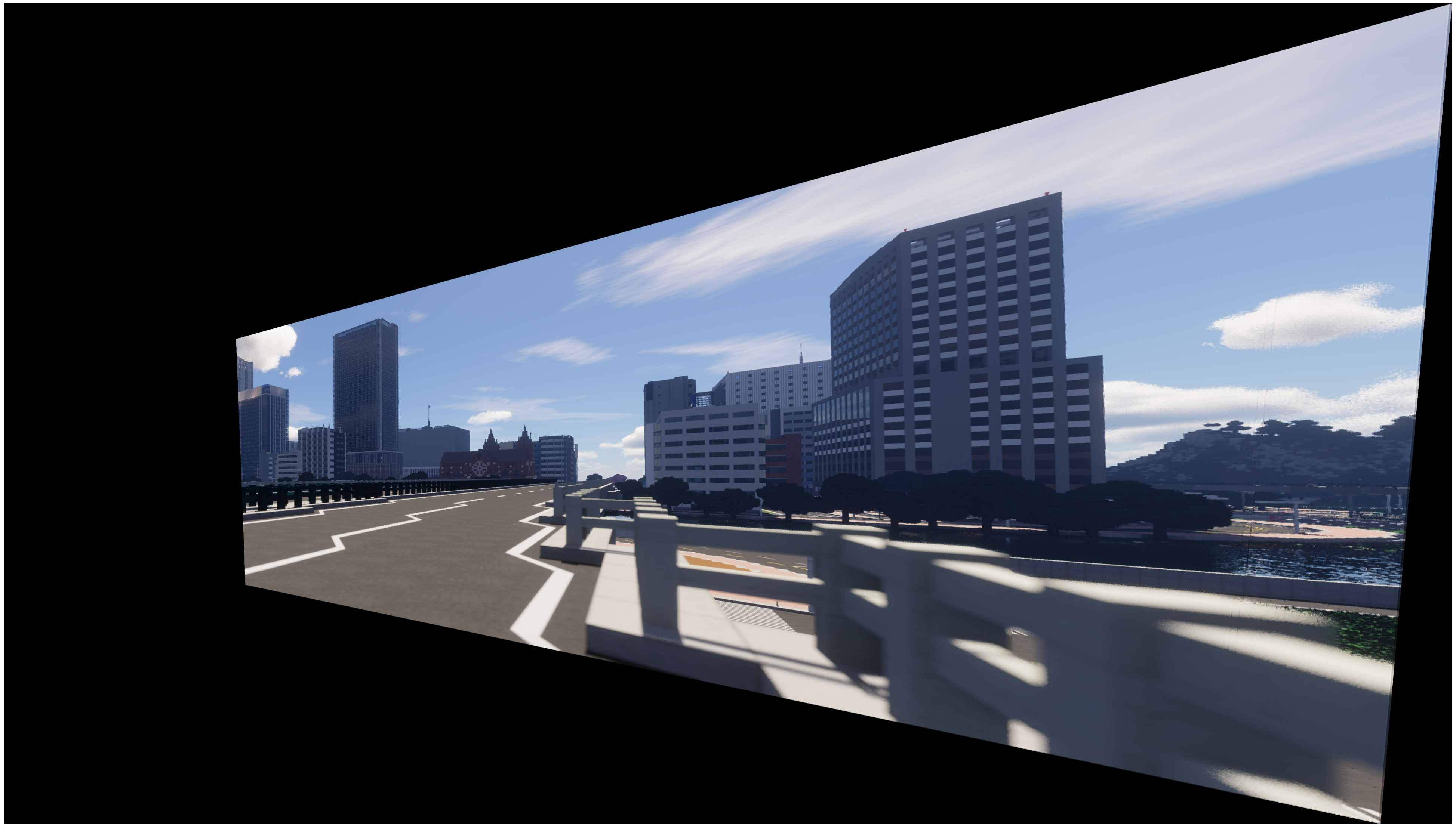

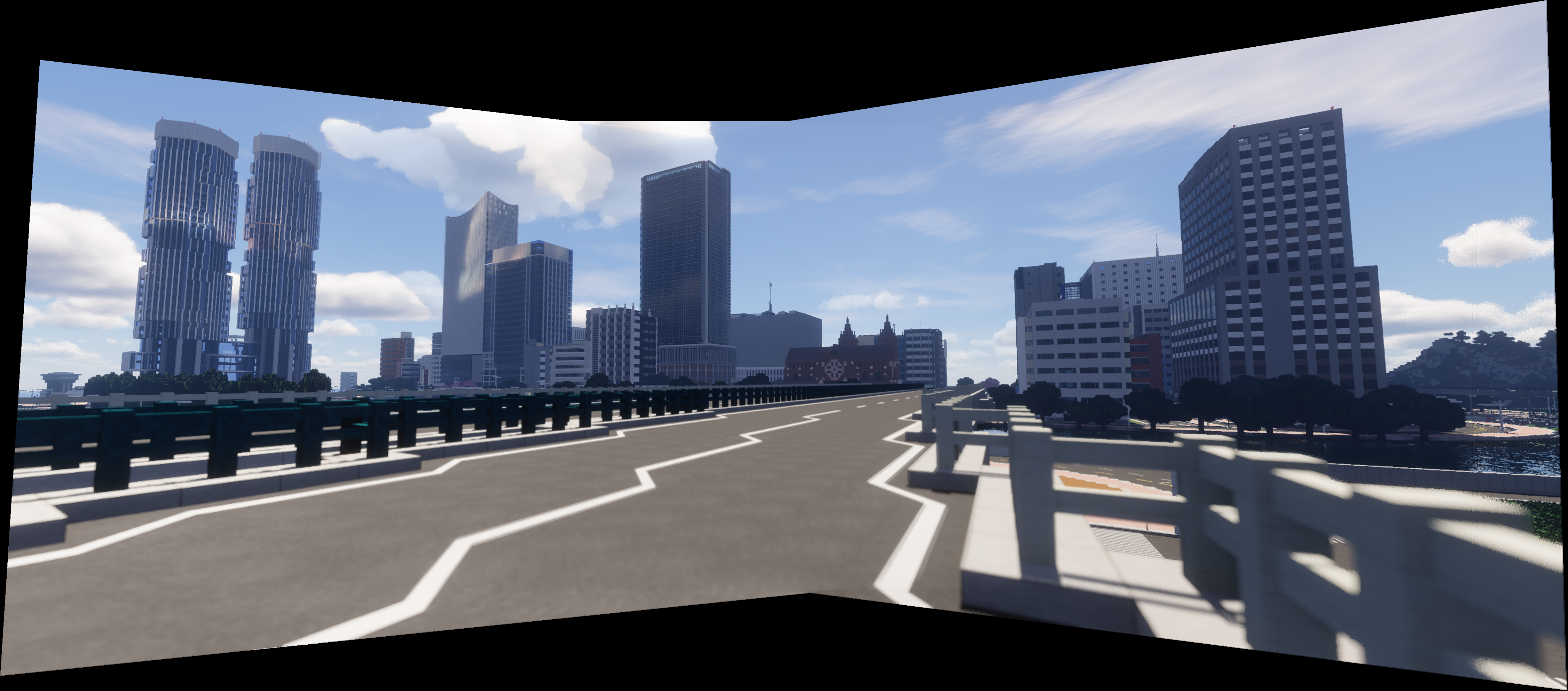

In this part, we blend the two images mentioned in part 2 and 3 into a mosaic. For a 2-image system to get a mosaic (which is not more, and is our case), we first warp the two images into the same coordinate system, and then blend the two images into one.

5.1 Alpha Blending

First, we use linear blending to blend the two images, a.k.a. Alpha blending, which is basically set 0.5 in the overlapping region of both masks and make the weighted sum.

However, we can observe apparent artifacts in the edges of the images.

We can use methods such as Laplacian Pyramid and Distance Transform.

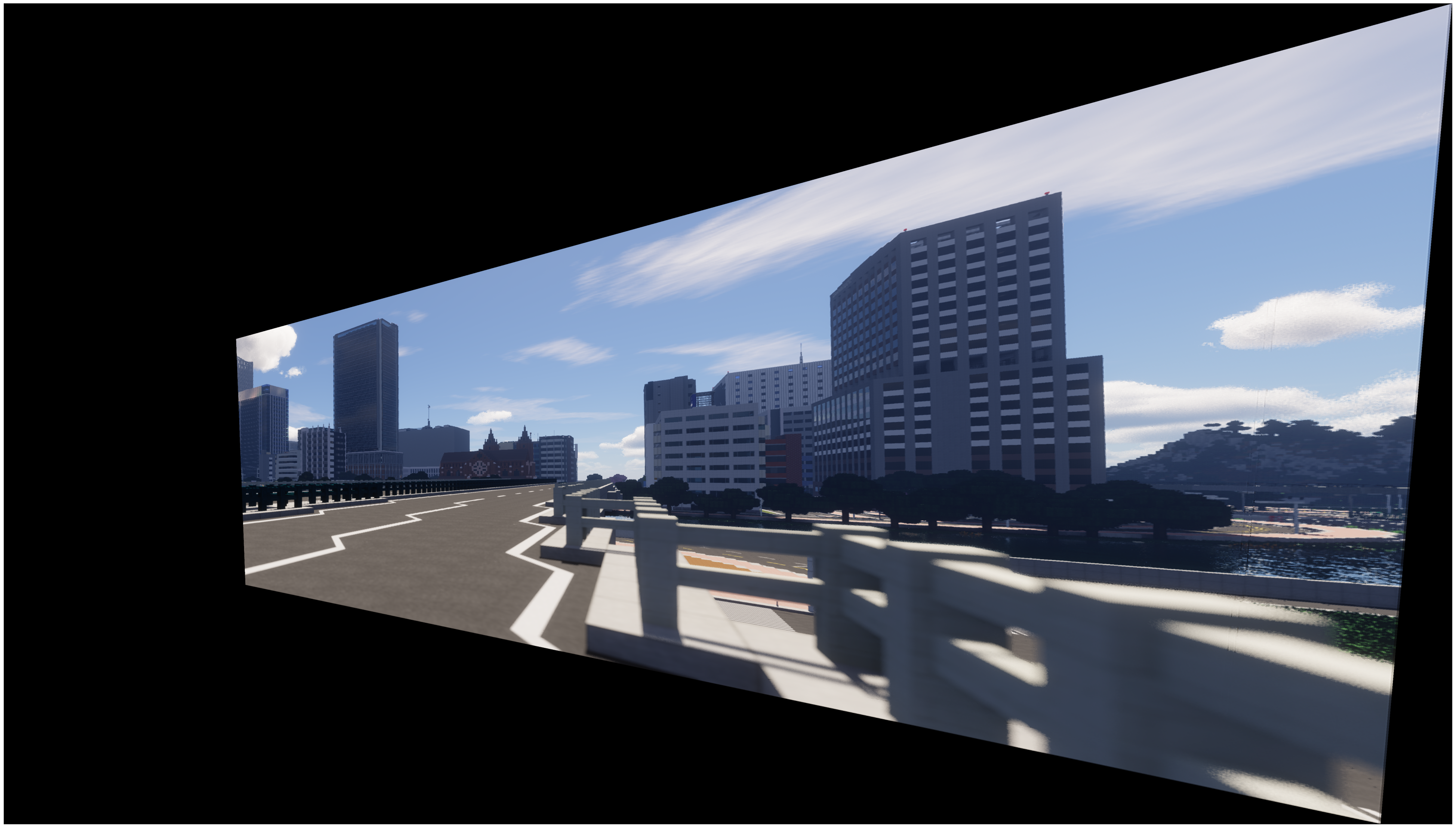

5.2 Distance Transform

We can see the two images are not perfectly aligned because of different illumination/exposure conditions and (possibly) correspondence by hand. We can see some artifacts in the final mosaic image.

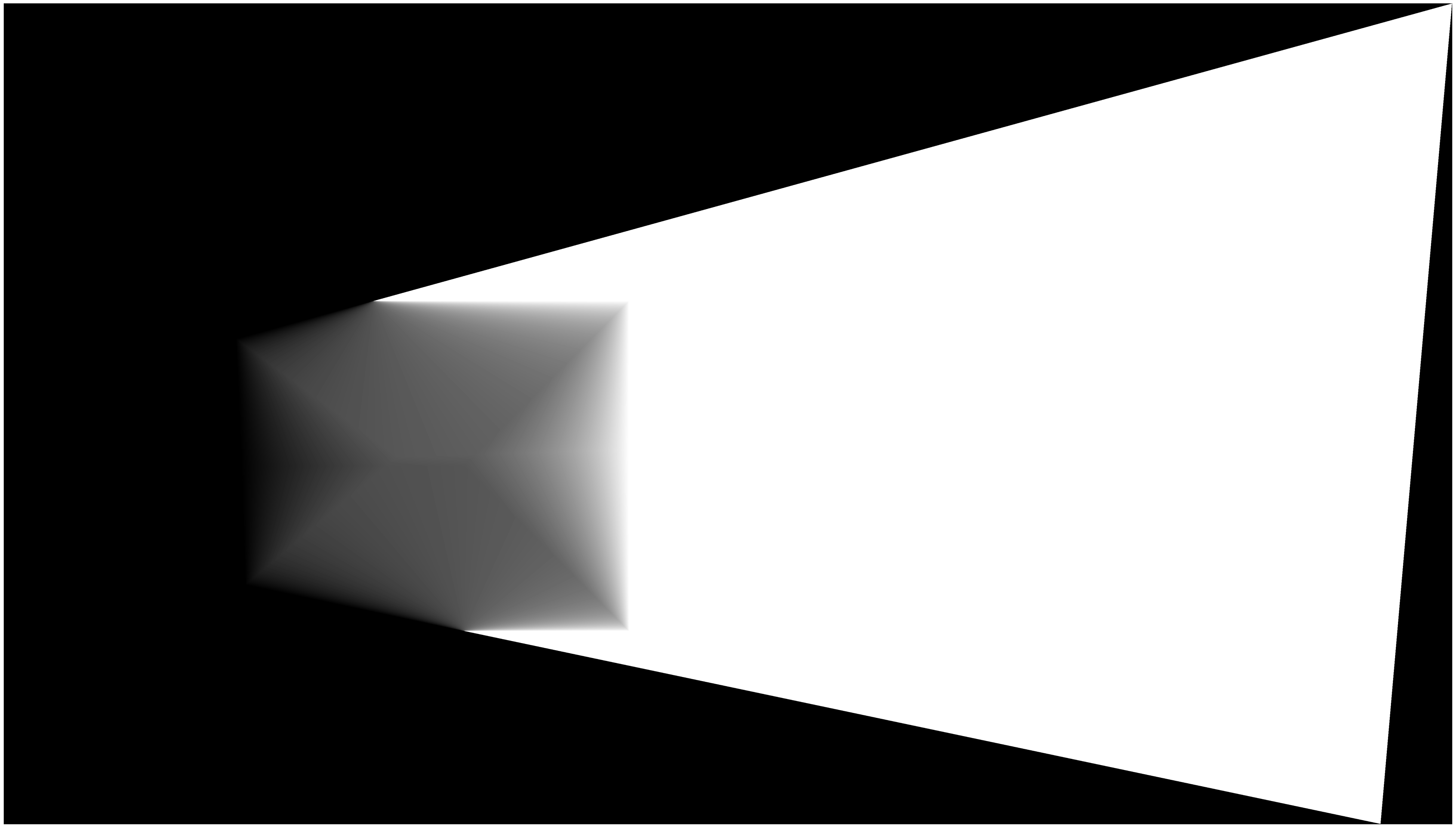

So we could use the distance transform method to blend the two images. The distance transform method is to calculate the distance of each pixel of each mask to the nearest edge (nearest zero pixel of the mask) of the two images, and blend the two images based on the distance. The closer the pixel to the edge, the more weight it will have in the blending process.

Here is step-by-step code to blend the two images using the distance transform method:

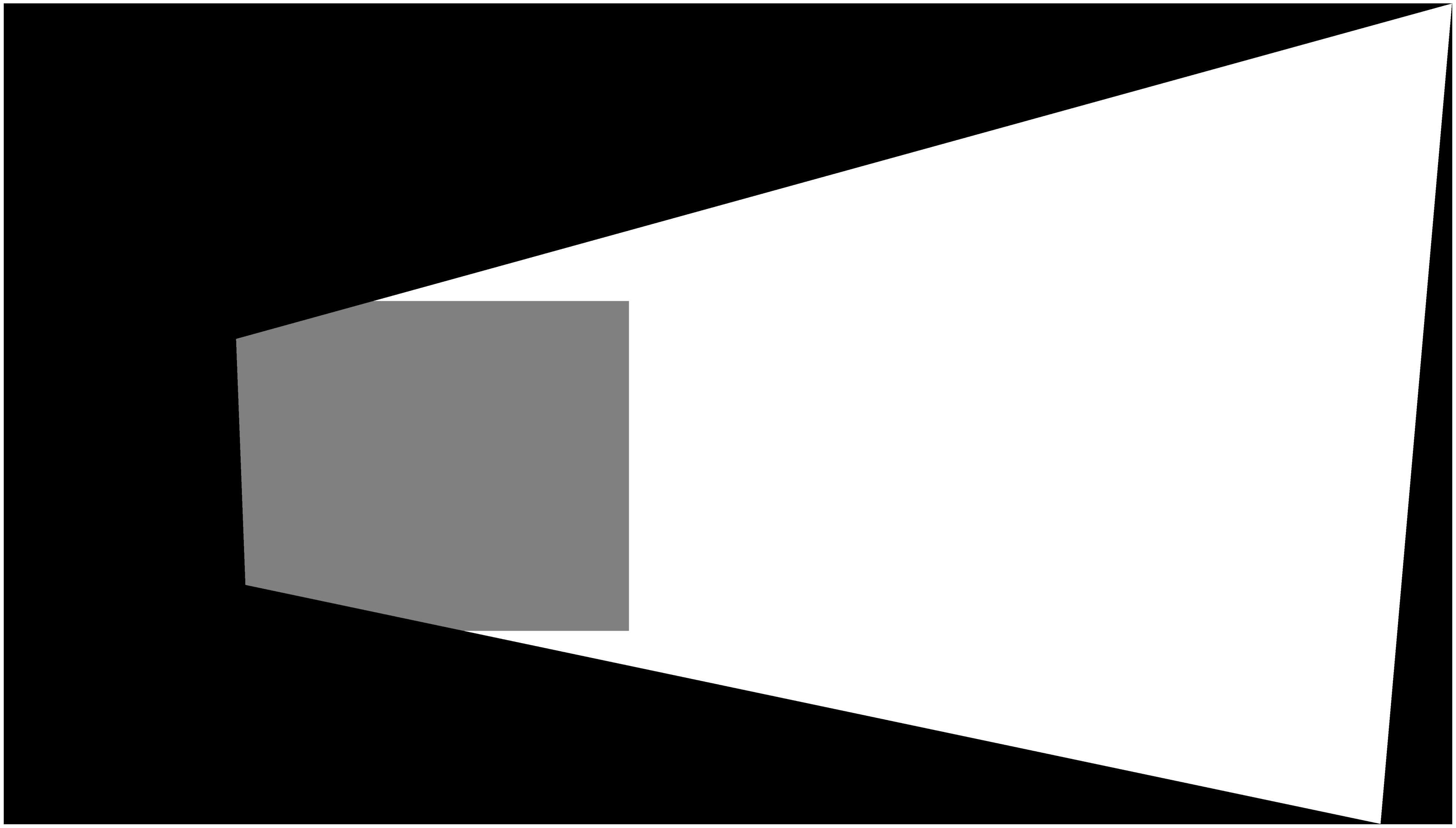

Calculate the distance of each mask to the nearest zero pixel of the mask. Let the mask be

Normalize the distance map to

Blend the two images based on the distance map. Let the two images be

Here

This seems much better.

I also implemented 3-image warping and blending.

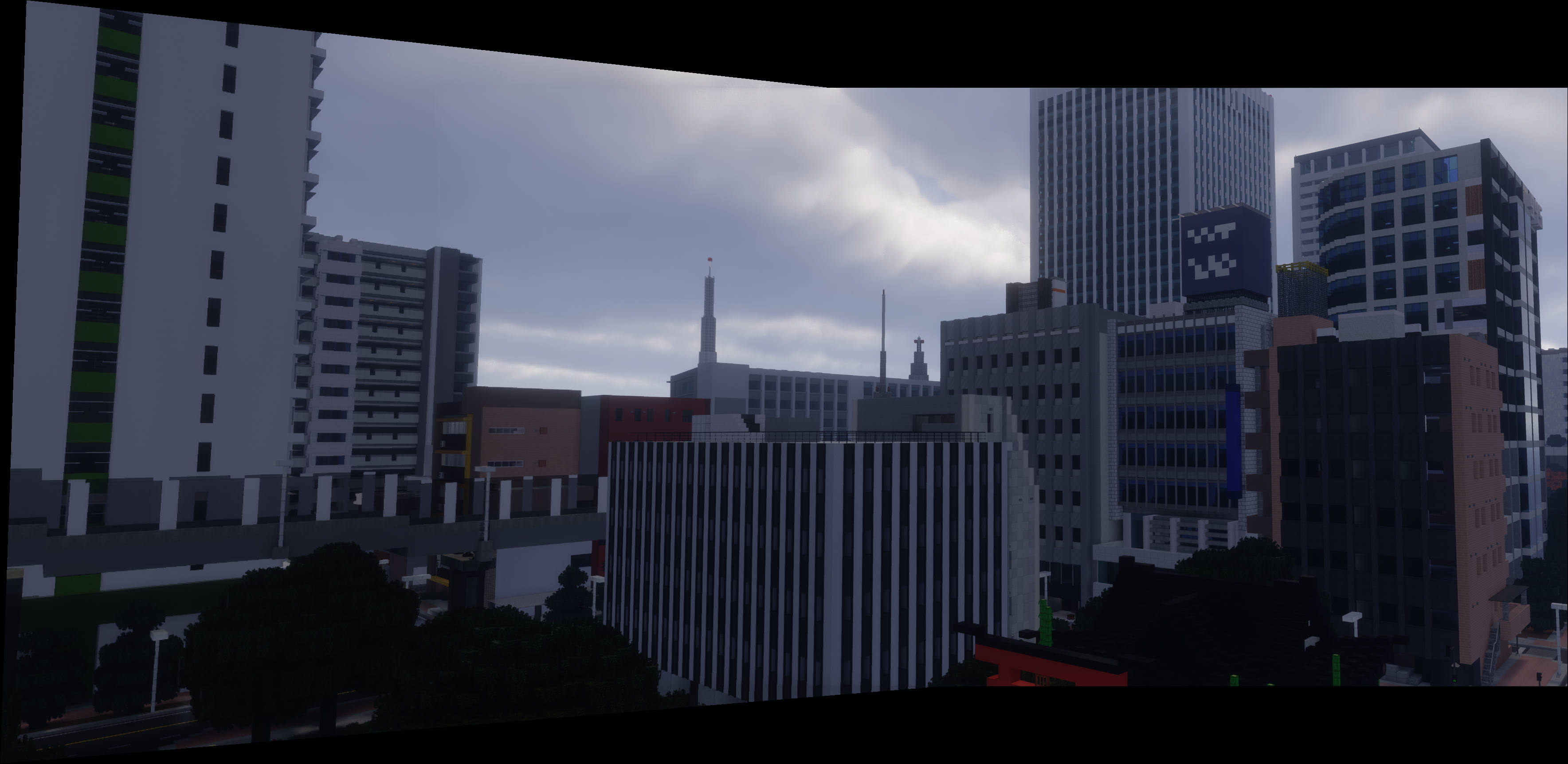

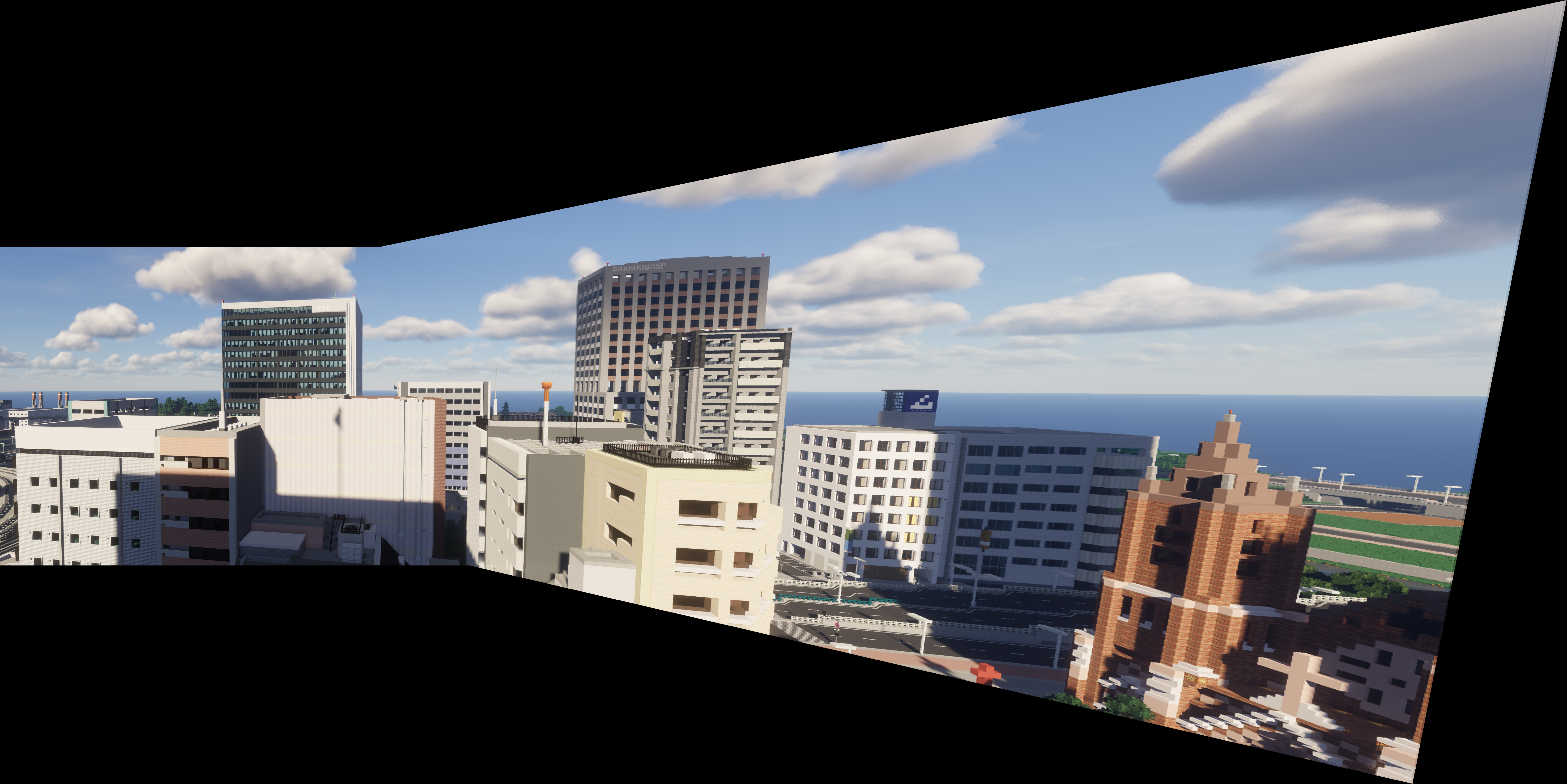

5.3 Deliverables: 3 Mosaics

Here lays my 3 done mosaics.

Mosaic 1

Mosaic 2

Mosaic 3

Part B

1. Corner detection

1.1 Harris Interest Point Detector

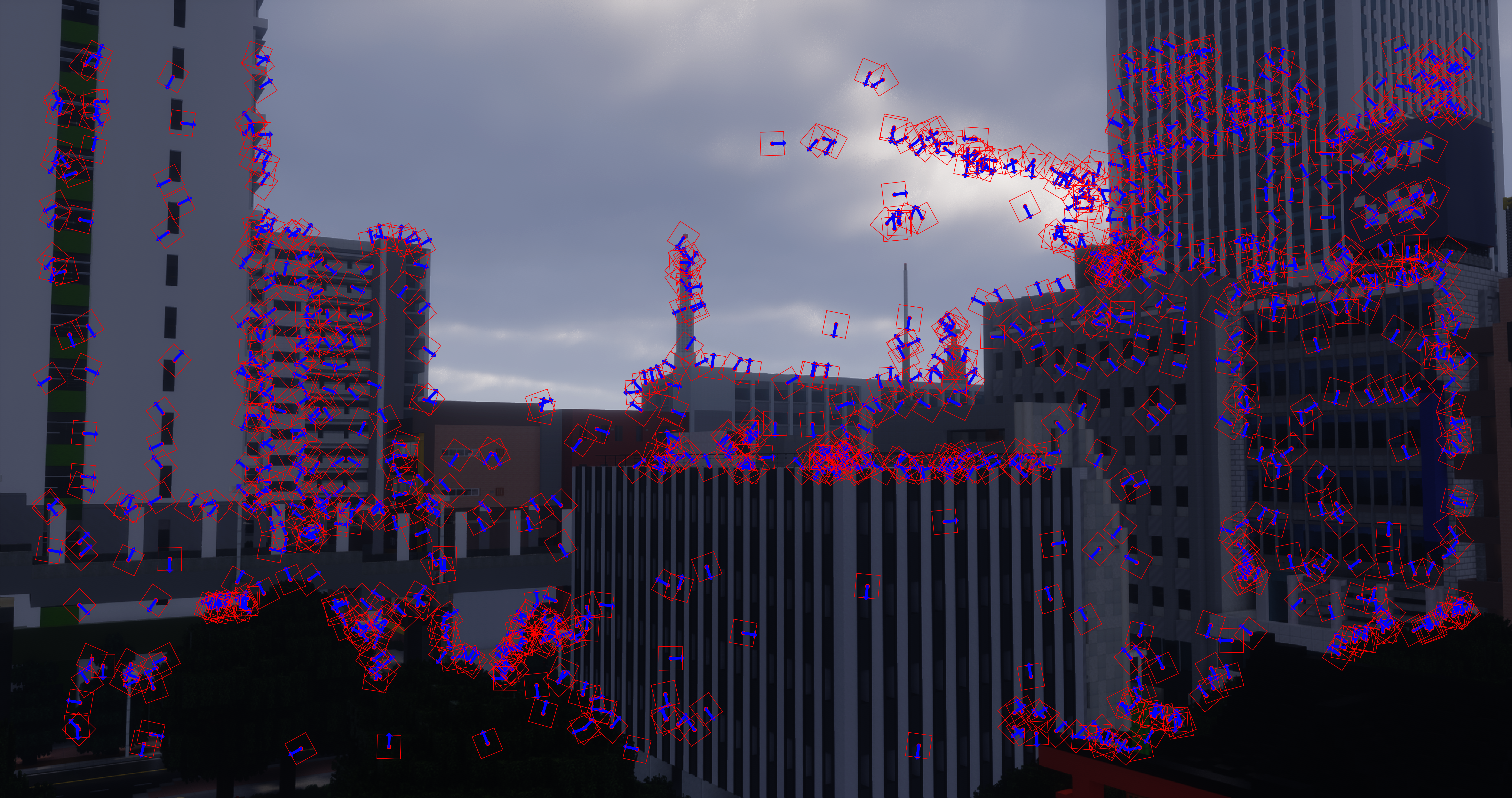

Initially, to find feature points from an image, we pay attention to the corners. Corners are the regions in the image where the intensity of the image changes in all directions. The Harris corner detection (Interest point detection) is a corner detection algorithm that is commonly used in computer vision algorithms. Below is the implementation of the Harris corner detection algorithm.

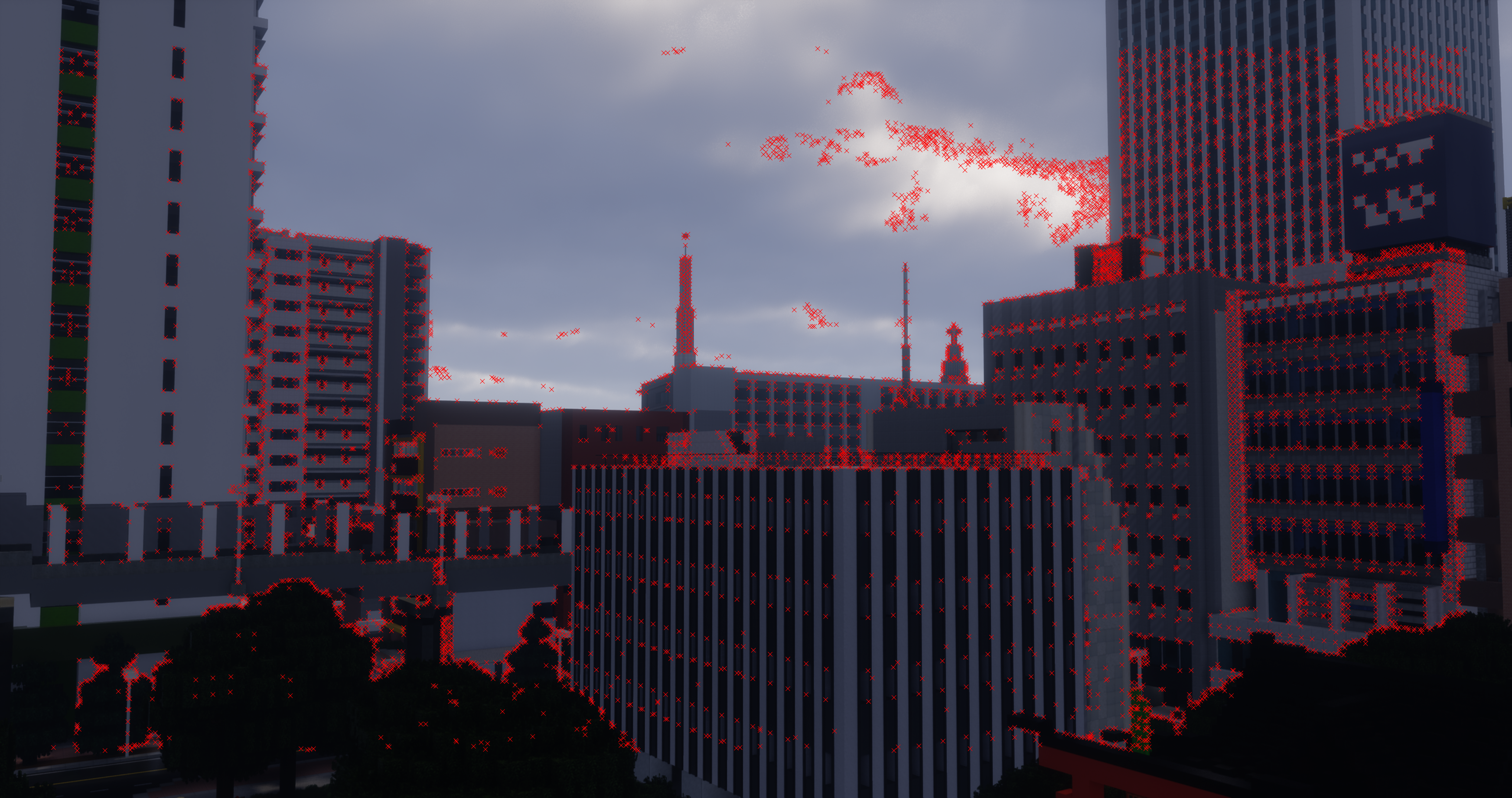

The Harris corner detection algorithm is based on thresholding and finding the local maxima of the Harris response function. We set the threshold to 0.002 times the maximum of the Harris heatmap.

1.2 ANMS (Adaptive Non-Maximal Suppression)

Harris corner detection algorithm gives us a lot of corner points. However, not all of them are useful and there are too many. Only a slightly greater than 4 feature points are needed to recover the homography from the overdetermined system.

To reduce the number of corner points, we use the Adaptive Non-Maximal Suppression (ANMS) algorithm. ANMS algorithm selects the best corner points from the corner points detected by the Harris corner detection algorithm. Below is the implementation of the ANMS algorithm.

Here is the algorithm step by step from the paper:

We are given the harris heatmap, which is a 2D array of corner scores.

For each corner, find the minimum distance to a corner with the score whose

where

Given a required number of corners

We use k-d tree to find the nearest neighbors efficiently and a heap as an ordered list so as to implement the ANMS algorithm within a reasonable time.

Also, for the hyperparameters, we choose a

2. Extracting Feature Descriptors

2.1 Axis-aligned Patches

Now we have acquired the feature points from the image. The next step is to extract the feature descriptors from the image to find matches. We use MOPS (Multi-Orientation Patch Sampling) to extract the feature descriptors. In 2.1, we first compute axis-aligned patches.

In detail, for every corner point from ANMS, we extract a 40x40 patch around the corner point. We then apply Gaussian filter to the patch and downsample it to 8x8. We then flatten the 8x8 patch to a 64-dimensional feature vector, also normalize it to have zero mean and unit variance.

We also visualize the patches without being flattened and normalized as raw feature descriptors. Note that we yield grayscale patches for matching.

2.2 Rotation-Invariant Descriptors (Bells & Whistles)

This part is independently shown in B.5.

3. Feature Matching

Now we have already extracted the feature descriptors from the image. The next step is to find the matches between the feature descriptors from the two images. We use the Squared Sum of Differences (SSD) to find the best matches between the feature descriptors from the two images. To further improve the matching and reduce the number of false matches, we use:

Symmetry Check: Check if the best match from

Lowe's Ratio Test: Check if the best match from

RANSAC (Random Sample Consensus): Find the largest set of inliers from the matches in a stochastic way.

3.1 Symmetry Check and Lowe's Ratio Test

We implement the Symmetry Check and Lowe's Ratio Test to find the valid matches between the feature descriptors from the two images. We also show the matches between the feature descriptors from the two images.

One can find that even after these checks and tricks, there are still too many unnecessary connections (correspondences).

3.2 RANSAC

We now apply RANSAC on these feature pairs. RANSAC includes these steps:

Select 4 feature pairs at random;

Compute homography

Compute inliers where

Repeat 1-3 for

Refit the homography

Note that because of the stochastic nature of RANSAC, the automatically found correspondence points can be wrong in a certain chance when mosaicing. In such case, we can rerun or fine-tune the hyperparameters in the pipeline, to get a more robust or better auto-stitching with better performance. The producing may not be successful all the time, but the implemented pipeline is able to find the correct correspondence and homography in a tractable chance.

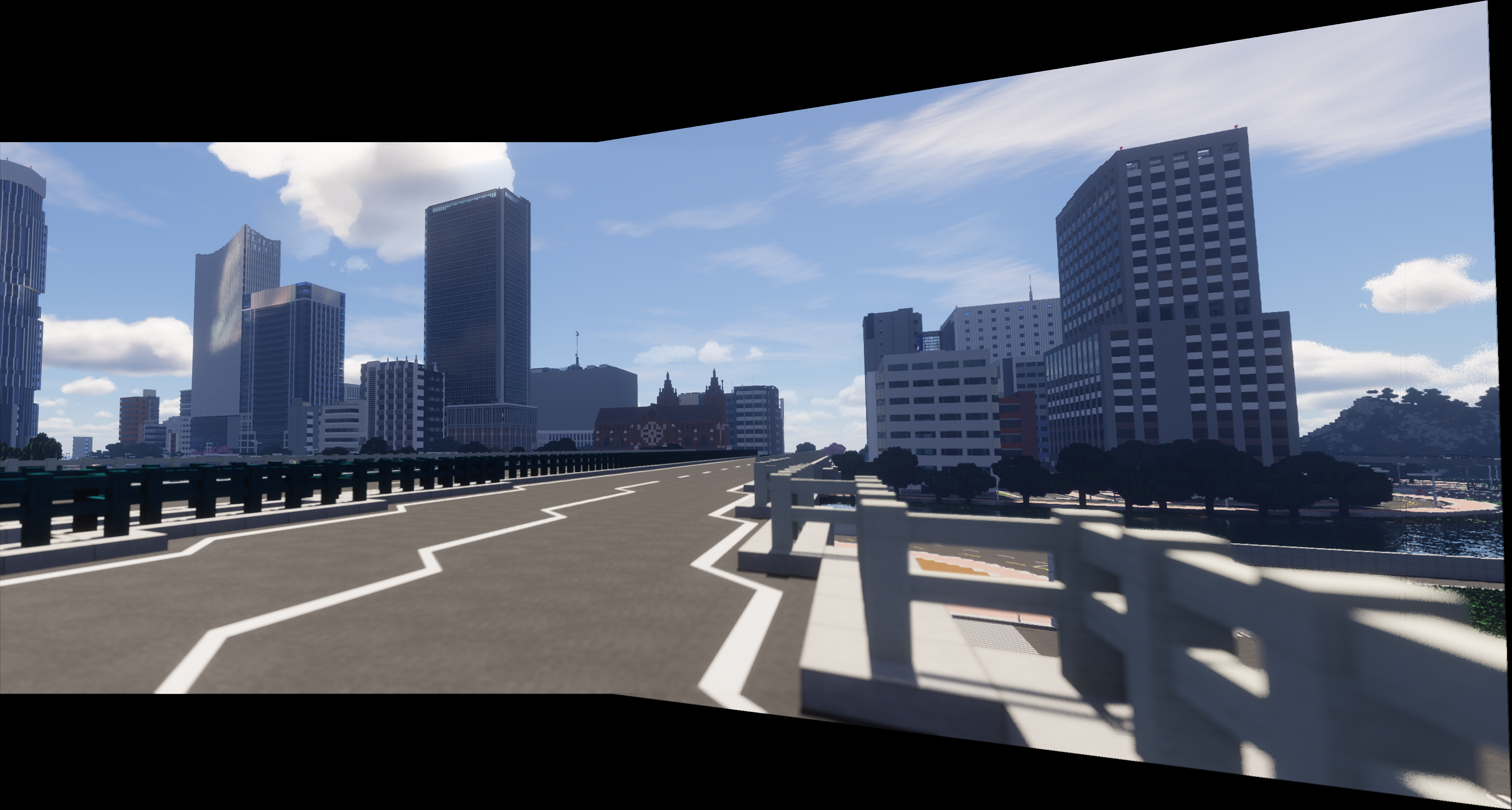

4. Mosaic Generation (Image Blending)

Finally, we generate the mosaic of the two images using the homography matrix obtained from RANSAC. We use the homography matrix to warp the first image to the second image and blend the two images to generate the mosaic using distance transform. We also show the final mosaic of the two images.

Below are the deliverables, i.e. 3 mosaics with comparison between manually and automatically stitched images.

Here we only use axis-aligned feature descriptors. For the B&W: rotation-invariant descriptors, readers may see the part B.5.

Deliverables: 3 Mosaics

5. Rotation-Invariant Descriptors

We compute the orientation of the corner points, rotate the image accordingly (which is equivalent to rotating the window) and slice from a window to make the feature descriptors rotation-invariant. The gradients and orientations are computed using the Sobel operator, from grayscale version of the image.

Deliverables: 3 Mosaics using Rotation-Invariant Descriptors

What I've learned?

In part A, I have learned the perspective projection, the importance of fixing COP while taking photos, the fine-grained details of recovering homography matrices & warping images, and the distance transform method to blend images.

In part B, I have learned various algorithms for detecting & selecting feature points, expressing features, matching features (as well as reducing them to a reasonable amount correctly).